GPT-NeoX

GPT-NeoX is heavily optimized for training rather than inference, and supports checkpoint conversion to the Hugging Face Transformers format.

Pricing

Free

Tool Info

Date Added: April 23, 2024

Categories

LLMs, Developer Tools

Description

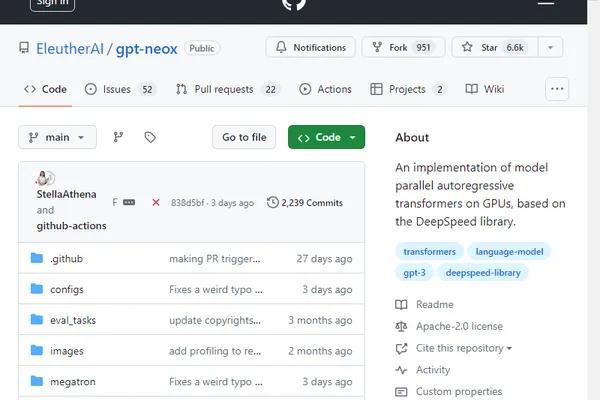

GPT-NeoX is optimized specifically for training language models; it is not designed for inference or production use. It focuses on maximizing training performance, and it supports converting checkpoints to the Hugging Face Transformers format so that trained models are easy to load and share with end users. Built on the DeepSpeed library, GPT-NeoX provides a GPU-optimized implementation of model-parallel autoregressive transformers.

Key Features

- Optimized for training language models.

- Supports checkpoint conversion to the Hugging Face Transformers format.

- Provides an implementation of model-parallel autoregressive transformers optimized for GPUs.

- Built on the DeepSpeed library.
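
To make the term "model parallel" concrete, here is a minimal toy sketch in plain Python. It is not GPT-NeoX or DeepSpeed code: the function names (`matmul`, `split_columns`, `parallel_linear`) are hypothetical, and the "devices" are simulated as list slices. It assumes one common form of model parallelism, in which a layer's weight matrix is split column-wise so that each device computes only its own slice of the output.

```python
# Toy illustration of model (tensor) parallelism; not GPT-NeoX code.
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product of x (n x k) and w (k x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Split w column-wise into equal shards, one per hypothetical device."""
    cols = len(w[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]


def parallel_linear(x: Matrix, w: Matrix, num_shards: int) -> Matrix:
    """Compute x @ w shard by shard, then stitch the output columns together."""
    # Each shard only ever sees its own slice of the weights.
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    # Concatenate per-shard outputs along the column axis
    # (on real hardware this step would be a collective such as all-gather).
    return [sum((p[r] for p in partials), []) for r in range(len(x))]
```

Calling `parallel_linear(x, w, 2)` returns the same matrix as `matmul(x, w)`, while each simulated device holds only half of the weights. That is the property that lets models too large for one GPU's memory be trained across several.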
